
By Vicky Mahn-DiNicola, RN, MS, CPHQ, and Kim Charland, BA, RHIT, CCS for VBP monitor

The healthcare industry is almost five months into ICD-10 implementation. While the conversion seems to have gone rather smoothly, it may be too early to evaluate how ICD-10-coded data has affected quality measurement. However, because there is not always a one-to-one correspondence between ICD-9 and ICD-10 codes, and because the meaning of an ICD-10 code can differ from that of its ICD-9 counterpart, it is almost certain that the way populations of interest are defined will change.

One area likely to be affected is the reporting of quality data. The following is an interview with Vicky Mahn-DiNicola, vice president of research and market insights at Midas+ Xerox, conducted during ICD10monitor’s Talk Ten Tuesdays weekly internet broadcast on Tuesday, Feb. 9.

Question: Vicky, you represent Midas+, one of the country’s largest and most experienced healthcare analytics and core measure vendors. How do you think the transition to ICD-10 will affect the quality measures currently being collected and reported to CMS (the Centers for Medicare & Medicaid Services) and The Joint Commission for Core Measures?

Response: This may be the burning question of the hour, but I think it is premature to answer it. While on the surface it seems fairly straightforward to crosswalk the 9s and 10s to create the qualifying cases in a core measure topic area, we need to stay mindful that there could be shifts in the volumes of some of these populations. For example, the coding rules for determining the principal diagnosis for sepsis are very different in ICD-10 than in ICD-9, and we could see shifts in the measure denominators due either to the changes in sequencing rules or to differences in coder proficiency. I don’t think anyone really knows yet what level of variation in performance data we will see across hospitals in these early years of ICD-10 adoption.

Question: Are there any national organizations that are looking at the potential variation in coding for core measures?

Response: I know that the CMS Work Group is working on this. I’m sure they will need at least two quarters of data to complete their analysis, and so I wouldn’t expect to see any results of their findings until the end of this year at the earliest.

Question: As far as other measures, such as the AHRQ PSI 90 Composite measure, which is risk-adjusted using claims data, do you think hospitals will be able to maintain longitudinal consistency as we transition from 9s to 10s?

Response: Apart from the fact that AHRQ hasn’t officially released the final measure definitions using ICD-10 codes, I think there will be some shifting in performance data … due to changes in coding practices. At Midas+ we are already seeing shifts in case volumes for clinical populations such as acute renal failure, ischemic stroke, respiratory failure and pulmonary insufficiency, septicemia, and viral and other pneumonias. So far we believe these shifts are due to the ways in which principal or secondary diagnosis codes are assigned. All of these changes in coding practice have the potential to either increase or decrease the number of encounters captured in a given metric, as well as the degree of risk assigned to them by the measure’s risk adjustment methodology. And remember, each time AHRQ changes its software version, there are changes in measure logic and coefficient weightings. So, bottom line, I think hospitals are going to be challenged to maintain longitudinal consistency.

Question: Can you explain what you mean by longitudinal consistency as it relates to coding?

Response: Longitudinal consistency has to do with maintaining consistent meaning over time. Since codes represent concepts, we need to ensure that the codes we are using as we transition from one set of coding rules to the next have the same meaning within a measure definition or population of interest.

Perhaps the simplest example is a measure that looks at acute MI mortality. Under ICD-9-CM, a myocardial infarction is coded as acute if it has a duration of eight weeks or less. Under ICD-10-CM, that period is four weeks.

Since a relatively high percentage of acute MI deaths tend to occur around six weeks after the event, when the injured area of the heart has become rigid and less flexible, we could see a decline in our overall mortality rates once we narrow the phenomenon of interest from eight weeks to four weeks. The integrity of the measure has changed because the concepts in our coding taxonomy are different. That is a lack of longitudinal consistency. It has more to do with measure construct than with coding practices, but we need expert coders to help us understand the differences.
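The effect of the shorter window can be made concrete with a small Python sketch. It assumes a hypothetical encounter record carrying the number of days from MI onset, and it uses made-up encounters rather than real data. It deliberately ignores how encounters outside the window would actually be coded and only shows how narrowing the "acute" definition from eight weeks to four changes both the numerator and the denominator of a mortality measure.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Acute-MI duration windows as described in the interview, in days from onset.
ICD9_ACUTE_WINDOW_DAYS = 8 * 7   # ICD-9-CM: eight weeks or less
ICD10_ACUTE_WINDOW_DAYS = 4 * 7  # ICD-10-CM: four weeks or less


@dataclass
class Encounter:
    # Hypothetical record layout, for illustration only.
    encounter_id: str
    days_since_onset: int  # days from MI onset to the encounter
    died: bool


def acute_mi_mortality(encounters: List[Encounter], window_days: int) -> Tuple[int, int, float]:
    """Return (deaths, denominator, rate) for encounters inside the acute window."""
    cohort = [e for e in encounters if 0 <= e.days_since_onset <= window_days]
    deaths = sum(1 for e in cohort if e.died)
    rate = deaths / len(cohort) if cohort else 0.0
    return deaths, len(cohort), rate


# Hypothetical encounters; two of the deaths fall around six weeks post-event.
encounters = [
    Encounter("A", 3, False),
    Encounter("B", 10, False),
    Encounter("C", 20, True),
    Encounter("D", 42, True),
    Encounter("E", 45, True),
]

for label, window in [("ICD-9-CM", ICD9_ACUTE_WINDOW_DAYS), ("ICD-10-CM", ICD10_ACUTE_WINDOW_DAYS)]:
    deaths, n, rate = acute_mi_mortality(encounters, window)
    print(f"{label}: {deaths}/{n} = {rate:.1%}")
```

With these invented encounters the loop prints `ICD-9-CM: 3/5 = 60.0%` and `ICD-10-CM: 1/3 = 33.3%`. No patient's care changed, yet the measured rate dropped simply because the concept being counted changed.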

There are many examples of this in the new ICD-10 world, and it is a lot more complex than a GEMs (General Equivalence Mappings) crosswalk. Once we start consuming the codes for performance management, research, and outcome evaluation, we really have to take a close look at our measures to see whether their meaning has changed, particularly for measures we are tracking over time.

Question: So, what advice would you give to hospitals that are monitoring their performance data over the next 6 to 18 months?

Response: I think hospitals should very quickly evaluate any metrics used for OPPE (Ongoing Professional Practice Evaluation), board reports, department performance, or any hospital targets tied to executive bonuses. I would start by monitoring time-trended data in SPC (statistical process control) charts to identify any significant variation. Next, I would lay out the measure definitions from ICD-9 and compare them to the definitions using ICD-10. Not only do you want to ensure that the crosswalk between 9 and 10 is complete and correct, but you also want to understand whether the new codes differ in concept because of coding definitions or sequencing rules, and I would encourage quality teams to include a medical records coder in this work. Bottom line, from my perspective, ICD-10 is a game changer. Unfortunately, I think the general expectation in the industry is that performance measurement and quality vendors will maintain longitudinal consistency for their clients, but hospitals need to understand that ICD-10 coding practice will take some time to equilibrate and will likely affect quality measures in ways that will be felt for several years.
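The interview recommends SPC charts without naming a chart type. For a rate-style measure such as mortality, one common choice is a p-chart. The Python sketch below assumes that choice. It centers the chart on pre-transition (ICD-9) months and flags ICD-10 months whose rate falls outside 3-sigma limits computed for that month's case count. The function names and monthly counts are hypothetical, and only the single "point beyond the limits" rule is implemented. Other run rules are not.

```python
import math
from typing import List, Tuple


def p_chart_limits(p_bar: float, n: int, sigmas: float = 3.0) -> Tuple[float, float]:
    """Lower and upper control limits for a proportion with subgroup size n."""
    sigma = math.sqrt(p_bar * (1.0 - p_bar) / n)
    return max(0.0, p_bar - sigmas * sigma), min(1.0, p_bar + sigmas * sigma)


def flag_post_transition_shifts(
    baseline_events: List[int],
    baseline_cases: List[int],
    new_events: List[int],
    new_cases: List[int],
) -> Tuple[float, List[bool]]:
    """Center the chart on the ICD-9 baseline and flag ICD-10 months outside its limits."""
    p_bar = sum(baseline_events) / sum(baseline_cases)
    flags = []
    for events, n in zip(new_events, new_cases):
        lcl, ucl = p_chart_limits(p_bar, n)
        rate = events / n
        flags.append(rate < lcl or rate > ucl)
    return p_bar, flags


# Hypothetical monthly counts for one measure: six ICD-9 months, three ICD-10 months.
baseline_events = [12, 10, 11, 13, 9, 11]
baseline_cases = [200] * 6
icd10_events = [10, 24, 1]
icd10_cases = [200] * 3

p_bar, flags = flag_post_transition_shifts(baseline_events, baseline_cases, icd10_events, icd10_cases)
print(f"baseline rate {p_bar:.3f}, out-of-control ICD-10 months: {flags}")
```

Here the baseline rate is 0.055 and the flags are `[False, True, True]`. A flagged month only signals variation worth investigating. In the spirit of the advice above, the next step would be to compare the ICD-9 and ICD-10 measure definitions with a coder before attributing the shift to a real change in quality.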

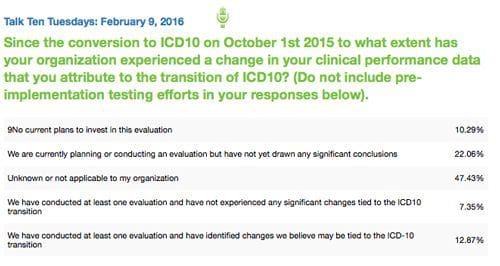

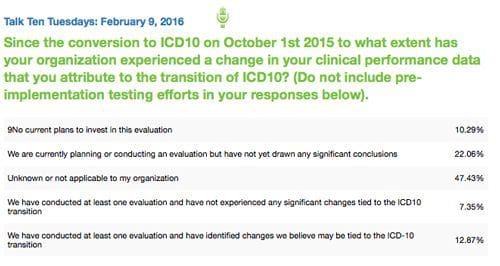

Each week during the free 30-minute Talk Ten Tuesdays broadcast, the audience is polled on current ICD-10 topics of interest. This week’s poll was based on Vicky’s interview:

Question: The results of our poll indicate that over 42 percent of respondents are engaged in some form of evaluation work related to the impact of ICD-10 coding on their quality measures. What insights can you offer about these results?

Response: I’m actually very pleased to see that so many hospitals are being vigilant about the impact of ICD-10 coding on their performance measures, and I would not be surprised to see these numbers go a bit higher in the months ahead. I think the challenge is going to be pinpointing exactly how much of these shifts in performance comes from the coding process versus a change in quality measure concepts. In addition, since coding ultimately depends on clinical documentation, I wonder how the adoption of EMR (electronic medical record) technologies will affect coding. It would be interesting to research how coding varies across organizations in relation to the various EMR systems currently in use. I anticipate that a great deal of insight will be forthcoming from AHIMA, the National Quality Forum, CMS, AHRQ, and The Joint Commission over the next year. I would encourage hospitals and healthcare systems to remain vigilant in their analysis and to continue sharing what they learn with their performance measurement vendors and regulatory agencies in the months and years ahead.